MiniMax Releases M2.1: An Enhanced M2 Version with Features like Multi-Language Coding Support, API Integration, and Improved Tools for Structured Coding

Just months after releasing M2—a fast, low-cost model designed for agents and code—MiniMax has introduced an enhanced version: MiniMax M2.1.

M2 already stood out for its efficiency, running at roughly 8% of the cost of Claude Sonnet while delivering significantly higher speed. More importantly, it introduced a distinctive reasoning pattern, interleaved thinking, which shapes how the model structures and executes its reasoning during complex code and tool-driven workflows.

M2.1 builds on this foundation, bringing tangible improvements across key areas: better code quality, smarter instruction following, cleaner reasoning, and stronger performance across multiple programming languages. These upgrades extend the original strengths of M2 while staying true to MiniMax’s vision of “Intelligence with Everyone.”

Beyond strengthening M2’s core capabilities, M2.1 is no longer only about better coding: it also produces clearer, more structured outputs across conversations, documentation, and writing. Its key highlights:

- **Built for real-world coding and AI-native teams:** Designed to support everything from rapid “vibe builds” to complex, production-grade workflows.
- **Goes beyond coding:** Produces clearer, more structured, and higher-quality outputs across everyday conversations, technical documentation, and writing tasks.
- **State-of-the-art multilingual coding performance:** Achieves 72.5% on SWE-Multilingual, outperforming Claude Sonnet 4.5 and Gemini 3 Pro across multiple programming languages.
- **Strong AppDev & WebDev capabilities:** Scores 88.6% on VIBE-Bench, exceeding Claude Sonnet 4.5 and Gemini 3 Pro, with major improvements in native Android, iOS, and modern web development.
- **Excellent agent and tool compatibility:** Delivers consistent and stable performance across leading coding tools and agent frameworks, including Claude Code, Droid (Factory AI), Cline, Kilo Code, Roo Code, BlackBox, and more.
- **Robust context management support:** Works reliably with advanced context mechanisms such as Skill.md, Claude.md / agent.md / cursorrule, and Slash Commands, enabling scalable agent workflows.
- **Automatic caching, zero configuration:** Built-in caching works out of the box to reduce latency, lower costs, and deliver a smoother overall experience.

To get started with MiniMax M2.1, you’ll need an API key from the MiniMax platform. You can generate one from the MiniMax user console.

Once issued, store the API key securely and avoid exposing it in code repositories or public environments.
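In scripts or CI jobs where an interactive prompt is not available, a common pattern is to read the key from an environment variable and fail fast if it is missing. The sketch below assumes the key lives in `ANTHROPIC_API_KEY`, the same variable the setup step populates; the helper names `load_api_key` and `mask` are hypothetical.

```python
import os


def load_api_key(var_name: str = "ANTHROPIC_API_KEY") -> str:
    """Return the key stored in `var_name`, or raise if it is unset or blank.

    Hypothetical helper: reading secrets from the environment keeps them
    out of source files and notebooks that may be committed or shared.
    """
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"{var_name} is not set; export it in your shell or secrets manager"
        )
    return key


def mask(key: str, visible: int = 4) -> str:
    """Hide all but the last few characters, e.g. when logging which key is in use."""
    if len(key) <= visible:
        return "*" * len(key)
    return "*" * (len(key) - visible) + key[-visible:]
```

Logging `mask(load_api_key())` rather than the raw value lets you confirm which key a job picked up without leaking it into logs.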

Installing & Setting Up the Dependencies

MiniMax supports both the Anthropic and OpenAI API formats, making it easy to integrate MiniMax models into existing workflows with minimal configuration changes—whether you’re using Anthropic-style message APIs or OpenAI-compatible setups.
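To make the difference between the two formats concrete, here is a minimal sketch that builds the same single-turn request as a plain dictionary in each style, without sending anything. The field layouts follow the public Anthropic Messages and OpenAI Chat Completions request formats; the helper names are hypothetical, and the exact MiniMax endpoint that accepts each format should be confirmed in MiniMax's documentation.

```python
from typing import Any, Dict

# Hypothetical helpers: they only build request bodies and never send them.


def anthropic_style(model: str, system: str, user_text: str, max_tokens: int) -> Dict[str, Any]:
    """Anthropic Messages layout: `system` is a top-level field, `max_tokens`
    is required, and message content is a list of typed blocks."""
    return {
        "model": model,
        "max_tokens": max_tokens,
        "system": system,
        "messages": [
            {"role": "user", "content": [{"type": "text", "text": user_text}]}
        ],
    }


def openai_style(model: str, system: str, user_text: str) -> Dict[str, Any]:
    """OpenAI Chat Completions layout: the system prompt is the first
    message, and content may be a plain string."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": user_text},
        ],
    }


anthropic_body = anthropic_style("MiniMax-M2.1", "You are a helpful assistant.", "Hi, how are you?", 1000)
openai_body = openai_style("MiniMax-M2.1", "You are a helpful assistant.", "Hi, how are you?")
```

The main structural difference is where the system prompt lives; the SDKs build these bodies for you, which is why switching formats mostly comes down to changing the client and base URL.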

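Install the Anthropic Python SDK with `pip install anthropic`, then point it at MiniMax’s Anthropic-compatible endpoint:

```python
import os
from getpass import getpass

os.environ['ANTHROPIC_BASE_URL'] = 'https://api.minimax.io/anthropic'
os.environ['ANTHROPIC_API_KEY'] = getpass('Enter MiniMax API Key: ')
```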

With just this minimal setup, you’re ready to start using the model.

Sending Requests to the Model

MiniMax M2.1 returns structured outputs that separate internal reasoning (thinking) from the final response (text). This allows you to observe how the model interprets intent and plans its answer before producing the user-facing output.

```python
import anthropic

client = anthropic.Anthropic()

message = client.messages.create(
    model="MiniMax-M2.1",
    max_tokens=1000,
    system="You are a helpful assistant.",
    messages=[
        {
            "role": "user",
            "content": [
                {
                    "type": "text",
                    "text": "Hi, how are you?"
                }
            ]
        }
    ]
)

# Print reasoning and final answer separately
for block in message.content:
    if block.type == "thinking":
        print(f"Thinking:\n{block.thinking}\n")
    elif block.type == "text":
        print(f"Text:\n{block.text}\n")
```

```
Thinking:
The user is just asking how I am doing. This is a friendly greeting, so I should respond in a warm, conversational way. I’ll keep it simple and friendly.

Text:
Hi! I’m doing well, thanks for asking! 😊

I’m ready to help you with whatever you need today. Whether it’s coding, answering questions, brainstorming ideas, or just chatting, I’m here for you.

What can I help you with?
```

What makes MiniMax stand out is the visibility into its reasoning process. Before producing the final response, the model explicitly reasons about the user’s intent, tone, and expected style, which helps keep the answer appropriate and context-aware.

Cleanly separating reasoning from responses makes the model easier to interpret, debug, and trust, especially in complex agent-based or multi-step workflows. With M2.1, this clarity comes with faster responses, more concise reasoning, and substantially lower token consumption than M2.
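Because reasoning and answer arrive as separately typed blocks, an application can route them differently, for example logging the `thinking` text for debugging while showing only the `text` blocks to end users. The sketch below uses small stand-in dataclasses instead of real SDK objects so it runs offline; it assumes only the `type`, `thinking`, and `text` attributes read in the request example above, and `split_response` is a hypothetical helper.

```python
import logging
from dataclasses import dataclass
from typing import List, Union

logger = logging.getLogger("minimax.reasoning")


# Offline stand-ins mirroring the attributes read in the request example.
@dataclass
class ThinkingBlock:
    thinking: str
    type: str = "thinking"


@dataclass
class TextBlock:
    text: str
    type: str = "text"


Block = Union[ThinkingBlock, TextBlock]


def split_response(blocks: List[Block]) -> str:
    """Log reasoning at DEBUG level and return only the user-facing text."""
    answer_parts = []
    for block in blocks:
        if block.type == "thinking":
            logger.debug("model reasoning: %s", block.thinking)
        elif block.type == "text":
            answer_parts.append(block.text)
    return "\n".join(answer_parts)


demo = [
    ThinkingBlock("The user is greeting me; reply warmly."),
    TextBlock("Hi! I'm doing well, thanks for asking!"),
]
print(split_response(demo))
```

With real responses you would pass `message.content` directly, since the SDK blocks expose the same attributes.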

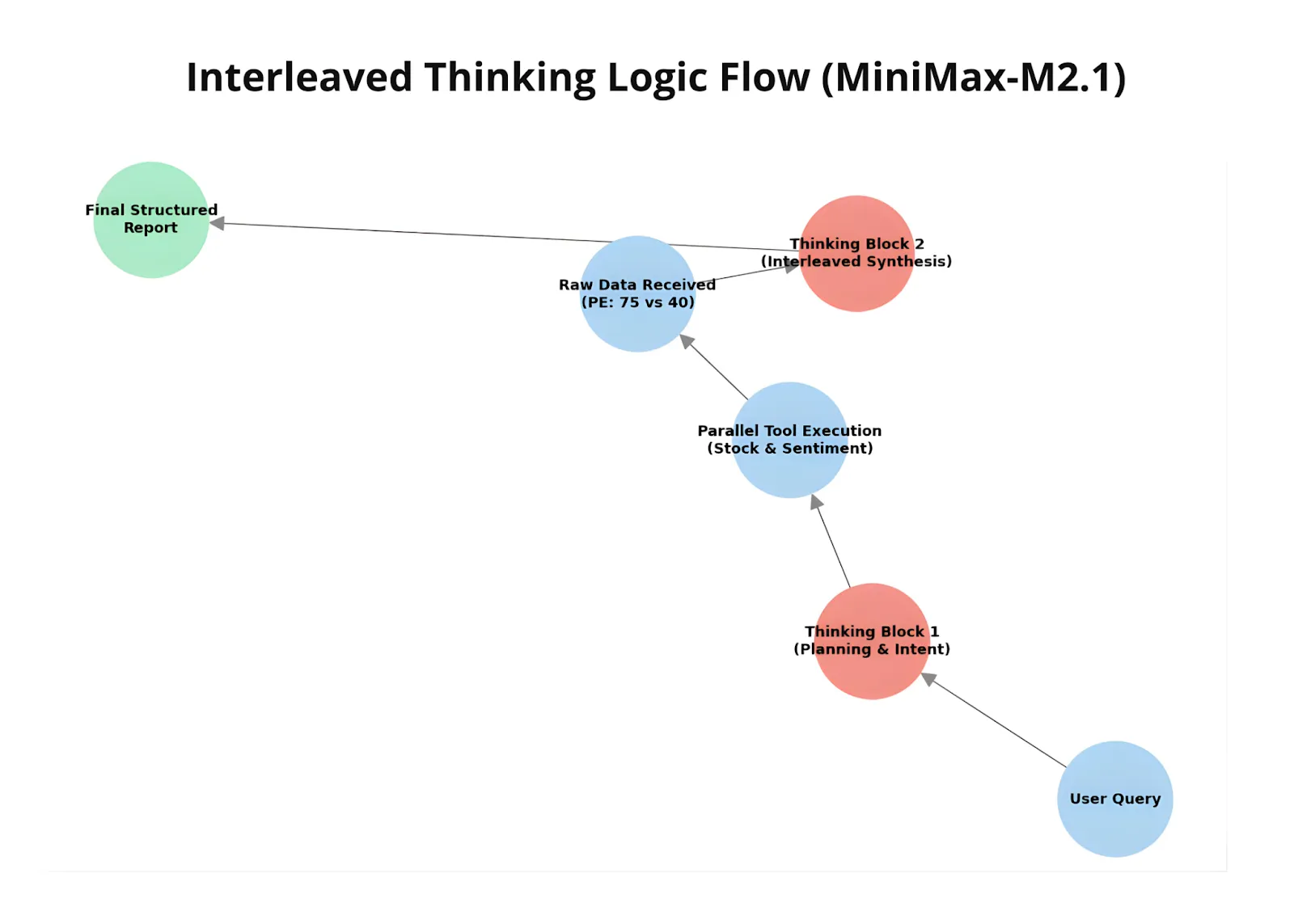

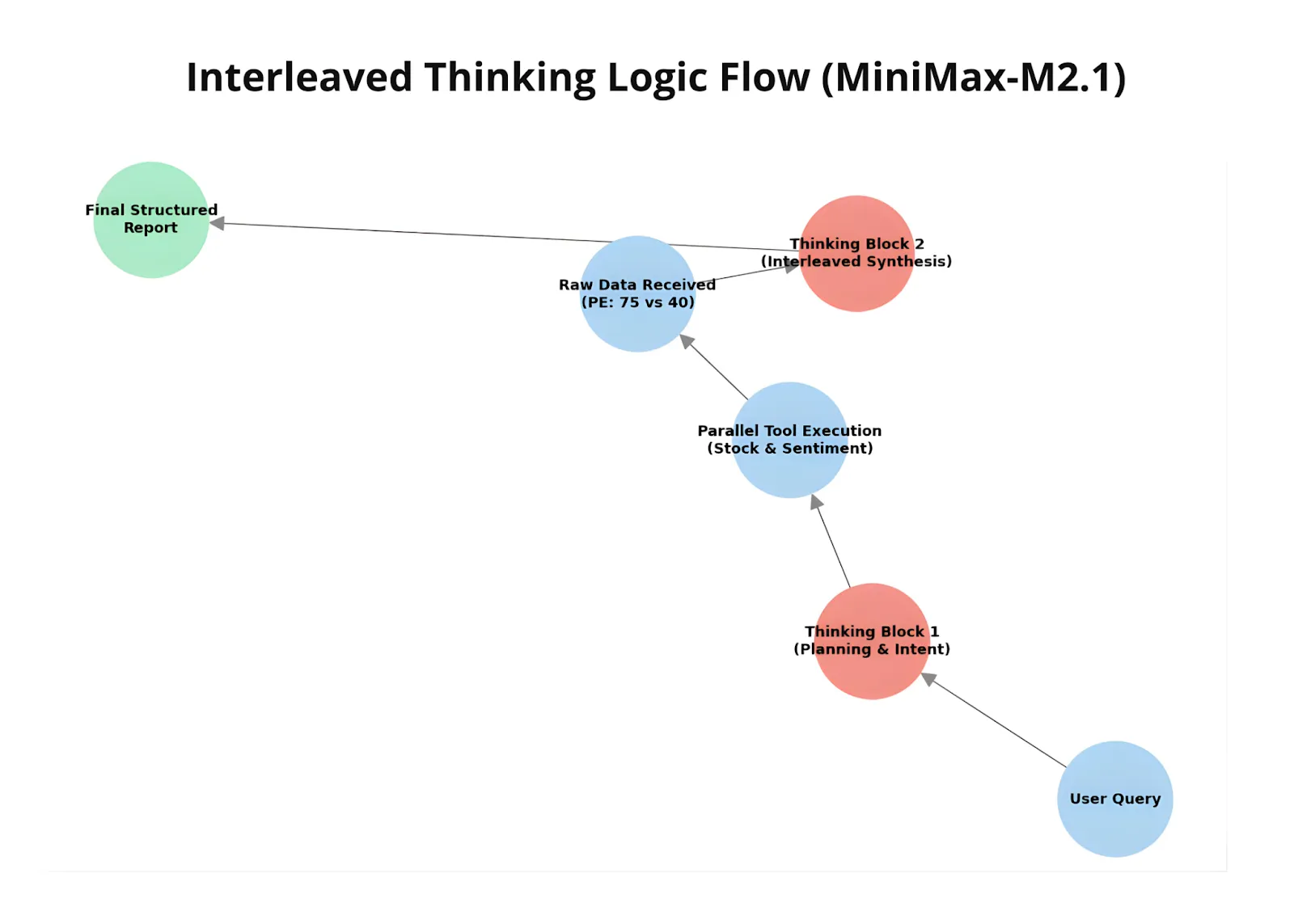

MiniMax M2 stands out for its native support for interleaved thinking, which lets it plan and adapt dynamically within complex coding and tool-based workflows. M2.1 extends this capability with improved code quality, more precise instruction following, clearer reasoning, and stronger performance across programming languages. It is notably better at handling composite instruction constraints, as reflected in OctoCodingBench, making it well suited to office automation tasks.
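In practice, interleaved thinking relies on the client keeping the conversation history intact: each assistant turn, including its thinking and `tool_use` blocks, is appended back to `messages` unchanged, and the Anthropic Messages format expects the next user turn to carry a `tool_result` for every `tool_use` id. The sketch below checks that second condition on plain-dict history; `unanswered_tool_calls` is a hypothetical helper, and the tool names are borrowed from the example later in this article.

```python
from typing import Any, Dict, List, Set

Message = Dict[str, Any]


def unanswered_tool_calls(history: List[Message]) -> Set[str]:
    """Return tool_use ids in the latest assistant turn that lack a matching
    tool_result in the user turn right after it (hypothetical helper)."""
    # Locate the most recent assistant turn, if any.
    for idx in range(len(history) - 1, -1, -1):
        if history[idx]["role"] == "assistant":
            break
    else:
        return set()

    requested = {
        block["id"]
        for block in history[idx]["content"]
        if isinstance(block, dict) and block.get("type") == "tool_use"
    }
    answered: Set[str] = set()
    if idx + 1 < len(history) and isinstance(history[idx + 1]["content"], list):
        answered = {
            block["tool_use_id"]
            for block in history[idx + 1]["content"]
            if isinstance(block, dict) and block.get("type") == "tool_result"
        }
    return requested - answered


# One interleaved round: reasoning plus two tool calls, but only one result so far.
history = [
    {"role": "user", "content": "Compare NVDA and AMD on P/E ratio."},
    {"role": "assistant", "content": [
        {"type": "thinking", "thinking": "I need metrics for both tickers."},
        {"type": "tool_use", "id": "t1", "name": "get_stock_metrics", "input": {"ticker": "NVDA"}},
        {"type": "tool_use", "id": "t2", "name": "get_stock_metrics", "input": {"ticker": "AMD"}},
    ]},
    {"role": "user", "content": [
        {"type": "tool_result", "tool_use_id": "t1", "content": '{"price": 130, "pe": 75.2}'},
    ]},
]
print(unanswered_tool_calls(history))  # {'t2'}
```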

To evaluate these capabilities in practice, let’s test the model using a structured coding prompt that includes multiple constraints and real-world engineering requirements.

```python
import anthropic

client = anthropic.Anthropic()


def run_test(prompt: str, title: str):
    print(f"\n{'=' * 80}")
    print(f"TEST: {title}")
    print(f"{'=' * 80}\n")

    message = client.messages.create(
        model="MiniMax-M2.1",
        max_tokens=10000,
        system=(
            "You are a senior software engineer. "
            "Write production-quality code with clear structure, "
            "explicit assumptions, and minimal but sufficient reasoning. "
            "Avoid unnecessary verbosity."
        ),
        messages=[
            {
                "role": "user",
                "content": [{"type": "text", "text": prompt}]
            }
        ]
    )

    for block in message.content:
        if block.type == "thinking":
            print("🧠 Thinking:\n", block.thinking, "\n")
        elif block.type == "text":
            print("📄 Output:\n", block.text, "\n")


PROMPT = """
Design a small Python service that processes user events.

Requirements:
1. Events arrive as dictionaries with keys: user_id, event_type, timestamp.
2. Validate input strictly (types + required keys).
3. Aggregate events per user in memory.
4. Expose two functions:
   - ingest_event(event: dict) -> None
   - get_user_summary(user_id: str) -> dict
5. Code must be:
   - Testable
   - Thread-safe
   - Easily extensible for new event types
6. Do NOT use external libraries.

Provide:
- Code only
- Brief inline comments where needed
"""

run_test(prompt=PROMPT, title="Instruction Following + Architecture")
```

This test uses a deliberately structured and constraint-heavy prompt designed to evaluate more than just code generation. The prompt requires strict input validation, in-memory state management, thread safety, testability, and extensibility—all without relying on external libraries.

By combining architectural decisions with multiple non-trivial constraints, the prompt operates at a medium-to-high complexity level, making it well-suited for assessing how effectively MiniMax M2.1 follows instructions, reasons through design trade-offs, and produces production-quality code rather than isolated snippets.

Model Reasoning & Output

The model reasons through key architectural trade-offs before coding, carefully balancing flexibility, memory usage, and extensibility. It evaluates multiple approaches to event aggregation and deliberately chooses raw event storage to enable future extensions without modifying ingestion logic.

Thread safety is explicitly handled through locking, and strict input validation is planned to ensure data correctness, reflecting a real-world, engineering-first mindset rather than jumping straight to implementation.
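To see what the planned locking protects, here is a minimal stress-check sketch: several threads ingest concurrently, and the final count should equal the number of events submitted. `CountingStore` is a hypothetical, stripped-down stand-in that applies the same lock-around-mutation idea the model describes, so the sketch runs on its own; a passing run is a sanity check, not a proof of thread safety.

```python
import threading
from collections import defaultdict
from typing import DefaultDict, List


class CountingStore:
    """Hypothetical stand-in: the lock guards every read and write of shared state."""

    def __init__(self) -> None:
        self._events: DefaultDict[str, List[dict]] = defaultdict(list)
        self._lock = threading.Lock()

    def ingest(self, event: dict) -> None:
        with self._lock:
            self._events[event["user_id"]].append(event)

    def total(self, user_id: str) -> int:
        with self._lock:
            # .get() avoids creating an empty entry for unknown users
            return len(self._events.get(user_id, []))


def stress(store: CountingStore, threads: int = 8, per_thread: int = 1000) -> int:
    """Ingest threads * per_thread events concurrently; return how many were stored."""

    def worker(worker_id: int) -> None:
        for i in range(per_thread):
            store.ingest({"user_id": "u1", "event_type": "click",
                          "timestamp": i, "worker": worker_id})

    pool = [threading.Thread(target=worker, args=(n,)) for n in range(threads)]
    for t in pool:
        t.start()
    for t in pool:
        t.join()
    return store.total("u1")


print(stress(CountingStore()))  # 8000 (8 threads x 1000 events)
```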

The model’s reasoning carries through to its final output, which shows strong code quality: clear structure, meaningful naming, type hints, and a thread-safe design aligned with production standards. The solution covers the core constraints (validation, in-memory aggregation, extensibility, and no external dependencies) while keeping the logic focused and easy to maintain, although it goes slightly beyond the “code only” request by appending a short design summary and usage example.

```python
import threading
from typing import Dict, List, Any


class EventProcessor:
    """
    Thread-safe event processor that aggregates user events in memory.
    Validates input strictly and supports easy extension for new event types.
    """

    def __init__(self) -> None:
        # Stores events per user: user_id -> list of event dictionaries
        self._user_events: Dict[str, List[Dict[str, Any]]] = {}
        # Lock for thread-safe access
        self._lock = threading.Lock()

    def ingest_event(self, event: dict) -> None:
        """
        Validate and ingest a single event.
        Strictly validates types and presence of required keys.
        """
        # Validate event is a dictionary
        if not isinstance(event, dict):
            raise ValueError("Event must be a dictionary")

        # Validate required keys and their types
        required_keys = {
            'user_id': str,
            'event_type': str,
            'timestamp': (str, int, float)  # Accept string or numeric timestamp
        }

        for key, expected_type in required_keys.items():
            if key not in event:
                raise ValueError(f"Missing required key: '{key}'")
            if not isinstance(event[key], expected_type):
                raise ValueError(f"Key '{key}' must be of type {expected_type.__name__}")

        # Thread-safe event storage
        with self._lock:
            user_id = event['user_id']
            if user_id not in self._user_events:
                self._user_events[user_id] = []
            self._user_events[user_id].append(event)

    def get_user_summary(self, user_id: str) -> dict:
        """
        Generate summary for a specific user.
        Returns aggregated event counts and timestamps.
        """
        if not isinstance(user_id, str):
            raise ValueError("user_id must be a string")

        with self._lock:
            user_events = self._user_events.get(user_id, [])

            # Aggregate event counts
            event_counts = {}
            timestamps = []
            for event in user_events:
                event_type = event['event_type']
                event_counts[event_type] = event_counts.get(event_type, 0) + 1
                timestamps.append(event['timestamp'])

            return {
                'user_id': user_id,
                'total_events': len(user_events),
                'event_counts': event_counts,
                'timestamps': sorted(timestamps) if timestamps else []
            }
```

**Key Design Choices:**

1. **Thread Safety**: Uses `threading.Lock` to protect shared state during concurrent access
2. **Input Validation**: Strict type checking for required keys with clear error messages
3. **Extensibility**:
   - New event types automatically handled by dynamic counting
   - Easy to add new aggregations in `get_user_summary`
4. **Testability**: Clear separation of concerns, easy to mock for unit tests
5. **Memory Efficiency**: Stores only essential data (event dictionaries)

**Usage Example:**

```python
processor = EventProcessor()

# Ingest events
processor.ingest_event({
    'user_id': 'user123',
    'event_type': 'login',
    'timestamp': '2023-01-01T10:00:00Z'
})

# Get user summary
summary = processor.get_user_summary('user123')
print(summary)
```
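One detail worth checking before reusing the generated `EventProcessor`: for `timestamp` the expected type is the tuple `(str, int, float)`, and tuples have no `__name__` attribute, so a wrongly typed timestamp raises `AttributeError` while the error message is being built instead of the intended `ValueError`. Also, `bool` is a subclass of `int` in Python, so `True` passes an `int` check. A minimal standalone sketch of a validator that handles both cases (the names `validate_event`, `type_names`, and `REQUIRED_KEYS` are illustrative, not from the model's output):

```python
from typing import Any, Dict, Tuple, Type, Union

TypeSpec = Union[Type, Tuple[Type, ...]]

# Required keys and accepted types, mirroring the generated EventProcessor.
REQUIRED_KEYS: Dict[str, TypeSpec] = {
    "user_id": str,
    "event_type": str,
    "timestamp": (str, int, float),
}


def type_names(spec: TypeSpec) -> str:
    """Readable name for a single type or a tuple of types."""
    if isinstance(spec, tuple):
        return " or ".join(t.__name__ for t in spec)
    return spec.__name__


def validate_event(event: Any) -> None:
    """Raise ValueError for any structural problem in `event`."""
    if not isinstance(event, dict):
        raise ValueError("Event must be a dictionary")
    for key, spec in REQUIRED_KEYS.items():
        if key not in event:
            raise ValueError(f"Missing required key: '{key}'")
        value = event[key]
        # bool is a subclass of int, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, spec):
            raise ValueError(f"Key '{key}' must be of type {type_names(spec)}")
```

Swapping this check into `ingest_event` keeps the rest of the class unchanged, and because it is a plain function it can be unit-tested without any threading.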

Let’s now see MiniMax M2.1’s interleaved thinking in action. We ask the model to compare two companies, NVIDIA and AMD, based on P/E ratio and sentiment, using two dummy tools to clearly observe how the workflow operates.

This example demonstrates how M2.1 interacts with external tools in a controlled, agent-style setup. One tool simulates fetching stock metrics, while the other provides sentiment analysis, with both returning locally generated responses. As the model receives these tool outputs, it incorporates them into its reasoning and adjusts its final comparison accordingly.

Defining the Tools

```python
import json

import anthropic

client = anthropic.Anthropic()


def get_stock_metrics(ticker):
    # Mock data returned as a JSON string, as a real tool might
    data = {
        "NVDA": {"price": 130, "pe": 75.2},
        "AMD": {"price": 150, "pe": 40.5}
    }
    return json.dumps(data.get(ticker, "Ticker not found"))


def get_sentiment_analysis(company_name):
    # Mock sentiment scores keyed by company name
    sentiments = {"NVIDIA": 0.85, "AMD": 0.42}
    return f"Sentiment score for {company_name}: {sentiments.get(company_name, 0.0)}"


tools = [
    {
        "name": "get_stock_metrics",
        "description": "Get price and P/E ratio.",
        "input_schema": {
            "type": "object",
            "properties": {"ticker": {"type": "string"}},
            "required": ["ticker"]
        }
    },
    {
        "name": "get_sentiment_analysis",
        "description": "Get news sentiment score.",
        "input_schema": {
            "type": "object",
            "properties": {"company_name": {"type": "string"}},
            "required": ["company_name"]
        }
    }
]
```
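A defensive client can check each `tool_use.input` against the tool's `input_schema` before dispatching, so a missing argument produces a readable error instead of a `KeyError` inside the mock function. A minimal sketch covering only the `properties`/`required`/simple-type subset used by the two tools above; a full JSON Schema validator would be needed for anything richer, and `schema_errors` is a hypothetical helper.

```python
from typing import Any, Dict, List

# JSON Schema primitive names mapped to Python types (subset only).
JSON_TYPES = {
    "string": str,
    "number": (int, float),
    "integer": int,
    "boolean": bool,
    "object": dict,
    "array": list,
}


def schema_errors(schema: Dict[str, Any], tool_input: Dict[str, Any]) -> List[str]:
    """Check required keys and top-level property types only (not full JSON Schema)."""
    errors = [f"missing '{k}'" for k in schema.get("required", []) if k not in tool_input]
    for key, prop in schema.get("properties", {}).items():
        if key in tool_input and "type" in prop:
            expected = JSON_TYPES.get(prop["type"])
            if expected and not isinstance(tool_input[key], expected):
                errors.append(f"'{key}' should be {prop['type']}")
    return errors


ticker_schema = {
    "type": "object",
    "properties": {"ticker": {"type": "string"}},
    "required": ["ticker"],
}
print(schema_errors(ticker_schema, {}))  # ["missing 'ticker'"]
```

Any errors can be returned to the model as the `tool_result` content so it gets a chance to correct its call.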

```python
# Illustrative user prompt; the exact wording of the original run is not shown.
messages = [
    {
        "role": "user",
        "content": "Compare NVIDIA (NVDA) and AMD using P/E ratio and news sentiment. Which looks stronger?"
    }
]

running = True
print(f"👤 [USER]: {messages[0]['content']}")

while running:
    # Get model response
    response = client.messages.create(
        model="MiniMax-M2.1",
        max_tokens=4096,
        messages=messages,
        tools=tools,
    )
    # Keep the full assistant turn (thinking + tool calls) in the history
    messages.append({"role": "assistant", "content": response.content})

    tool_results = []
    has_tool_use = False

    for block in response.content:
        if block.type == "thinking":
            print(f"\n💭 [THINKING]:\n{block.thinking}")
        elif block.type == "text":
            print(f"\n💬 [MODEL]: {block.text}")
        elif block.type == "tool_use":
            has_tool_use = True
            print(f"🔧 [TOOL CALL]: {block.name}({block.input})")

            # Execute the correct mock function
            if block.name == "get_stock_metrics":
                result = get_stock_metrics(block.input['ticker'])
            elif block.name == "get_sentiment_analysis":
                result = get_sentiment_analysis(block.input['company_name'])
            else:
                result = f"Unknown tool: {block.name}"

            # Add to the results list for this turn
            tool_results.append({
                "type": "tool_result",
                "tool_use_id": block.id,
                "content": result
            })

    if has_tool_use:
        # Send all tool results back in a single user turn
        messages.append({"role": "user", "content": tool_results})
    else:
        # No tool calls: the model has produced its final answer
        running = False

print("\n✅ Conversation Complete.")
```

During execution, the model decides when and which tool to call, receives the corresponding tool results, and then updates its reasoning and final response based on that data. This showcases M2.1’s ability to interleave reasoning, tool usage, and response generation—adapting its output dynamically as new information becomes available.
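The same control flow can be exercised offline, which is handy for unit-testing the loop itself. In the sketch below, a hypothetical list of canned assistant turns (`SCRIPT`) stands in for `client.messages.create`, and a dictionary dispatch table replaces the `if/elif` chain so an unknown tool name yields an error `tool_result` (using the `is_error` flag of the Anthropic Messages format). The blocks are plain dicts; nothing here calls the real API.

```python
import json
from typing import Any, Callable, Dict, List

Block = Dict[str, Any]


def get_stock_metrics(ticker: str) -> str:
    # Same mock data as the tool defined earlier
    data = {"NVDA": {"price": 130, "pe": 75.2}, "AMD": {"price": 150, "pe": 40.5}}
    return json.dumps(data.get(ticker, "Ticker not found"))


# Dispatch table: tool name -> local function
TOOLS: Dict[str, Callable[..., str]] = {"get_stock_metrics": get_stock_metrics}

# Hypothetical canned assistant turns standing in for real API responses
SCRIPT: List[List[Block]] = [
    [
        {"type": "thinking", "thinking": "I need P/E ratios for both tickers."},
        {"type": "tool_use", "id": "t1", "name": "get_stock_metrics", "input": {"ticker": "NVDA"}},
        {"type": "tool_use", "id": "t2", "name": "get_stock_metrics", "input": {"ticker": "AMD"}},
    ],
    [
        {"type": "thinking", "thinking": "AMD has the lower P/E."},
        {"type": "text", "text": "AMD trades at a lower P/E (40.5 vs 75.2)."},
    ],
]


def run_agent(user_prompt: str, script: List[List[Block]]) -> List[Dict[str, Any]]:
    """Replay `script` through the tool loop and return the full message history."""
    messages: List[Dict[str, Any]] = [{"role": "user", "content": user_prompt}]
    turns = iter(script)
    while True:
        content = next(turns)  # stands in for one client.messages.create call
        messages.append({"role": "assistant", "content": content})  # keep thinking blocks
        results = []
        for block in content:
            if block["type"] != "tool_use":
                continue
            tool = TOOLS.get(block["name"])
            if tool is None:
                results.append({"type": "tool_result", "tool_use_id": block["id"],
                                "content": f"Unknown tool: {block['name']}", "is_error": True})
            else:
                results.append({"type": "tool_result", "tool_use_id": block["id"],
                                "content": tool(**block["input"])})
        if not results:
            return messages  # no tool calls: final answer reached
        messages.append({"role": "user", "content": results})


history = run_agent("Compare NVDA and AMD on P/E ratio.", SCRIPT)
print([m["role"] for m in history])  # ['user', 'assistant', 'user', 'assistant']
```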

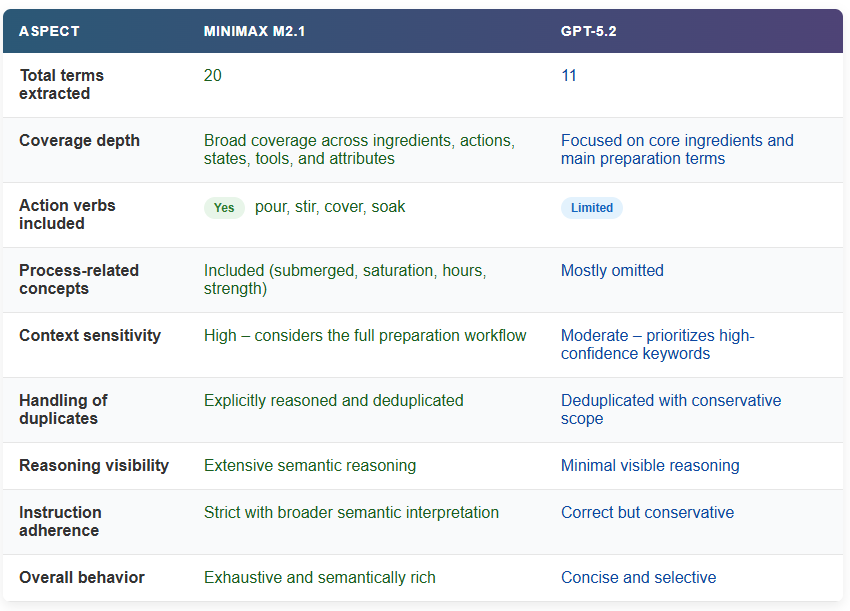

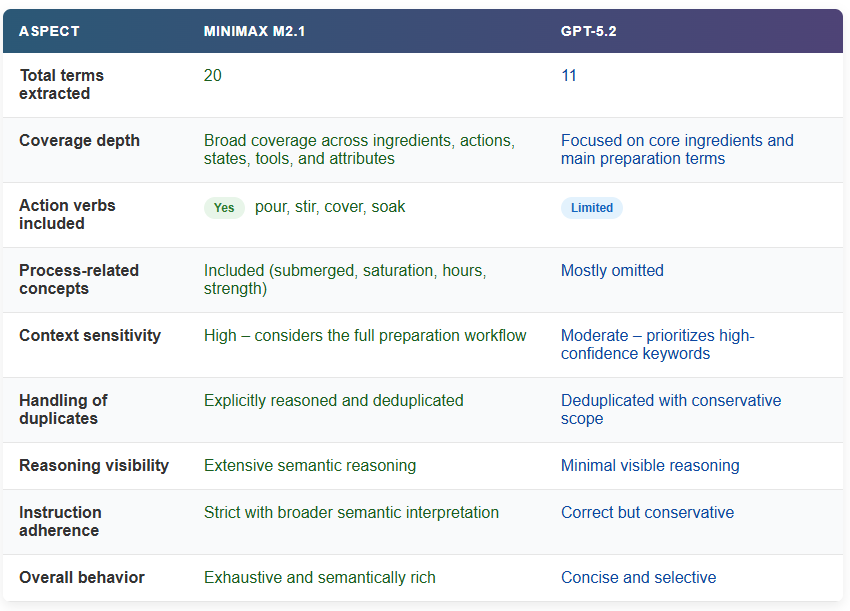

Finally, we compare MiniMax M2.1 with GPT-5.2 using a compact multilingual instruction-following prompt. The task requires the model to identify coffee-related terms from a Spanish passage, translate only those terms into English, remove duplicates, and return the result in a strictly formatted numbered list.

To run this code block, you’ll need an OpenAI API key, which can be generated from the OpenAI developer dashboard.

```python
import os
from getpass import getpass

os.environ['OPENAI_API_KEY'] = getpass('Enter OpenAI API Key: ')
```

```python
input_text = """
¡Preparar café Cold Brew es un proceso sencillo y refrescante!
Todo lo que necesitas son granos de café molido grueso y agua fría.
Comienza añadiendo el café molido a un recipiente o jarra grande.
Luego, vierte agua fría, asegurándote de que todos los granos de café
estén completamente sumergidos.
Remueve la mezcla suavemente para garantizar una saturación uniforme.
Cubre el recipiente y déjalo en remojo en el refrigerador durante al
menos 12 a 24 horas, dependiendo de la fuerza deseada.
"""

prompt = f"""
The following text is written in Spanish.

Task:
1. Identify all words in the text that are related to coffee or coffee preparation.
2. Translate ONLY those words into English.
3. Remove duplicates (each word should appear only once).
4. Present the result as a numbered list.

Rules:
- Do NOT include explanations.
- Do NOT include non-coffee-related words.
- Do NOT include Spanish words in the final output.

Text:
<{input_text}>
"""
```

```python
from openai import OpenAI

client = OpenAI()

response = client.responses.create(
    model="gpt-5.2",
    input=prompt
)

print(response.output_text)
```

```python
import anthropic

client = anthropic.Anthropic()

message = client.messages.create(
    model="MiniMax-M2.1",
    max_tokens=10000,
    system="You are a helpful assistant.",
    messages=[
        {
            "role": "user",
            "content": [
                {
                    "type": "text",
                    "text": prompt
                }
            ]
        }
    ]
)

for block in message.content:
    if block.type == "thinking":
        print(f"Thinking:\n{block.thinking}\n")
    elif block.type == "text":
        print(f"Text:\n{block.text}\n")
```

When comparing the outputs, MiniMax M2.1 produces a noticeably broader and more granular set of coffee-related terms than GPT-5.2. M2.1 identifies not only core nouns like coffee, beans, and water, but also preparation actions (pour, stir, cover), process-related states (submerged, soak), and contextual attributes (cold, coarse, strength, hours).

This suggests a deeper semantic pass over the text, in which the model reasons through the entire preparation workflow rather than extracting only the most obvious keywords.

This difference is also reflected in the reasoning process. M2.1 explicitly analyzes context, resolves edge cases (such as borrowed English terms like Cold Brew), considers duplicates, and deliberates on whether certain adjectives or verbs qualify as coffee-related before finalizing the list. GPT-5.2, by contrast, delivers a shorter and more conservative output focused on high-confidence terms, with less visible reasoning depth.

Together, these results point to M2.1’s stronger instruction adherence and semantic coverage in this test, especially for tasks that require careful filtering, translation, and strict output control.
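Since several of the prompt’s rules are mechanical (numbered list, no duplicates, no explanations), part of this comparison can be scored automatically rather than by eye. A minimal sketch, assuming each answer is a plain numbered list such as `1. coffee`; judging which terms actually count as coffee-related still needs a human or a reference list, and `check_numbered_list` is a hypothetical helper.

```python
import re
from typing import List

# A numbered item such as "1. coffee" or "12. cold brew".
ITEM = re.compile(r"^(\d+)\.\s+(\S.*)$")


def check_numbered_list(answer: str) -> List[str]:
    """Return format violations; an empty list means the answer passes.

    Checks only the mechanical rules: every non-blank line is a numbered
    item, numbering runs 1, 2, 3, ..., and no term repeats (case-insensitive).
    """
    lines = [ln.strip() for ln in answer.strip().splitlines() if ln.strip()]
    if not lines:
        return ["empty answer"]

    problems: List[str] = []
    seen = set()
    expected = 1
    for line in lines:
        match = ITEM.match(line)
        if not match:
            # Stray prose, such as an explanation the prompt forbade
            problems.append(f"not a numbered item: {line!r}")
            continue
        if int(match.group(1)) != expected:
            problems.append(f"numbering out of order at {line!r}")
        expected += 1
        term = match.group(2).strip().lower()
        if term in seen:
            problems.append(f"duplicate term: {term!r}")
        seen.add(term)
    return problems


print(check_numbered_list("1. coffee\n2. beans\n3. water"))  # []
```

Running both models’ `output_text` / `text` blocks through the same check gives a repeatable pass/fail signal for the formatting rules, leaving human review for the semantic ones.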

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.