Meta AI Released MobileLLM-R1: A Edge Reasoning Model with less than 1B Parameters and Achieves 2x–5x Performance Boost Over Other Fully Open-Source AI Models

Meta has released MobileLLM-R1, a family of lightweight edge reasoning models now available on Hugging Face. The release includes models ranging from 140M to 950M parameters, with a focus on efficient mathematical, coding, and scientific reasoning at sub-billion scale.

Unlike general-purpose chat models, MobileLLM-R1 is designed for edge deployment, aiming to deliver state-of-the-art reasoning accuracy while remaining computationally efficient.

What architecture powers MobileLLM-R1?

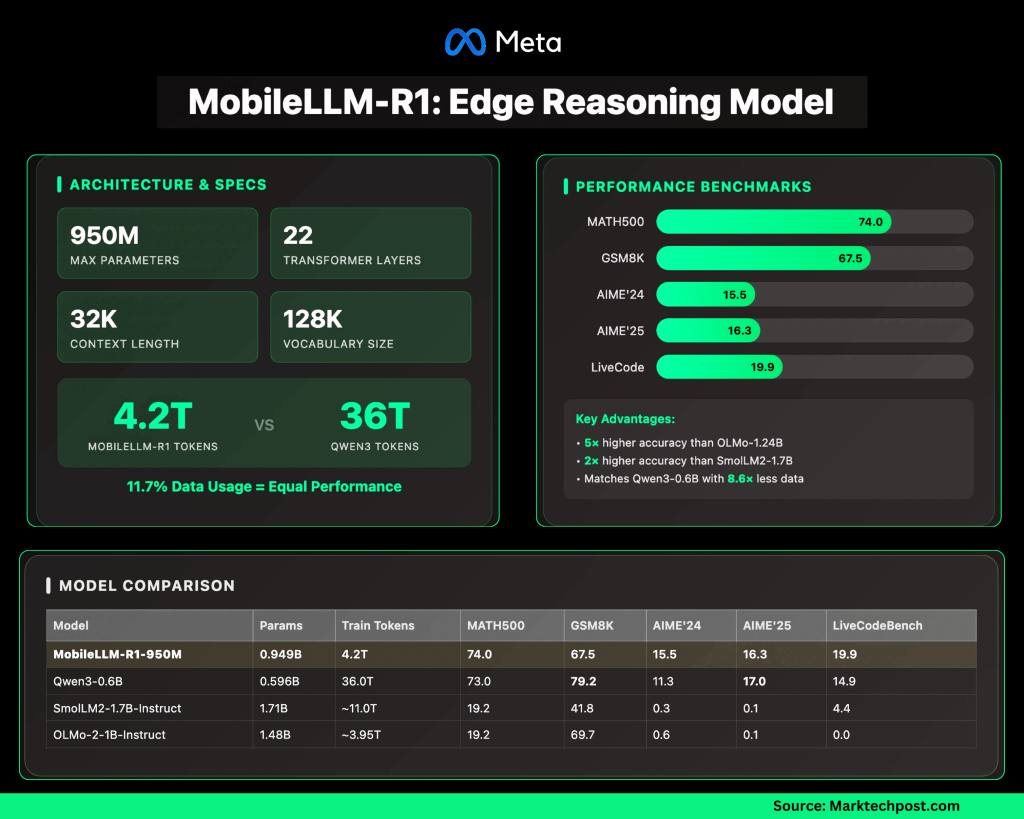

The largest model, MobileLLM-R1-950M, integrates several architectural optimizations:

22 Transformer layers with 24 attention heads and 6 grouped KV heads.

Embedding dimension: 1536; hidden dimension: 6144.

Grouped-Query Attention (GQA) reduces compute and memory.

Block-wise weight sharing cuts parameter count without heavy latency penalties.

SwiGLU activations improve small-model representation.

Context length: 4K for base, 32K for post-trained models.

128K vocabulary with shared input/output embeddings.

The emphasis is on reducing compute and memory requirements, making it suitable for deployment on constrained devices.

How efficient is the training?

MobileLLM-R1 is notable for data efficiency:

Trained on ~4.2T tokens in total.

By comparison, Qwen3’s 0.6B model was trained on 36T tokens.

This means MobileLLM-R1 uses only ≈11.7% of the data to reach or surpass Qwen3’s accuracy.

Post-training applies supervised fine-tuning on math, coding, and reasoning datasets.

This efficiency translates directly into lower training costs and resource demands.

How does it perform against other open models?

On benchmarks, MobileLLM-R1-950M shows significant gains:

MATH (MATH500 dataset): ~5× higher accuracy than Olmo-1.24B and ~2× higher accuracy than SmolLM2-1.7B.

Reasoning and coding (GSM8K, AIME, LiveCodeBench): Matches or surpasses Qwen3-0.6B, despite using far fewer tokens.

The model delivers results typically associated with larger architectures while maintaining a smaller footprint.

Where does MobileLLM-R1 fall short?

The model’s focus creates limitations:

Strong in math, code, and structured reasoning.

Weaker in general conversation, commonsense, and creative tasks compared to larger LLMs.

Distributed under FAIR NC (non-commercial) license, which restricts usage in production settings.

Longer contexts (32K) raise KV-cache and memory demands at inference.

How does MobileLLM-R1 compare to Qwen3, SmolLM2, and OLMo?

Performance snapshot (post-trained models):

Key observations:

R1-950M matches Qwen3-0.6B in math (74.0 vs 73.0) while requiring ~8.6× fewer tokens.

Performance gaps vs SmolLM2 and OLMo are substantial across reasoning tasks.

Qwen3 maintains an edge in GSM8K, but the difference is small compared to the training efficiency advantage.

Summary

Meta’s MobileLLM-R1 underscores a trend toward smaller, domain-optimized models that deliver competitive reasoning without massive training budgets. By achieving 2×–5× performance gains over larger open models while training on a fraction of the data, it demonstrates that efficiency—not just scale—will define the next phase of LLM deployment, especially for math, coding, and scientific use cases on edge devices.

Check out the Model on Hugging Face. Feel free to check out our GitHub Page for Tutorials, Codes and Notebooks. Also, feel free to follow us on Twitter and don’t forget to join our 100k+ ML SubReddit and Subscribe to our Newsletter.

Asif Razzaq is the CEO of Marktechpost Media Inc.. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts of over 2 million monthly views, illustrating its popularity among audiences.